Modern GTM systems depend heavily on lead enrichment tools. Routing, scoring, segmentation, personalization, and reporting all rely on enriched fields behaving consistently over time. Yet most teams still face the same problems: SDRs validating job titles manually, RevOps fixing schema drift, and marketing questioning whether segments are stable enough to run campaigns.

The issue isn’t a lack of data. It’s that lead enrichment tools follow different data philosophies: some prioritize scale, some emphasize firmographics, and others focus on workflow automation. And because each one structures and classifies data differently, even small variations can break the workflows that GTM teams depend on.

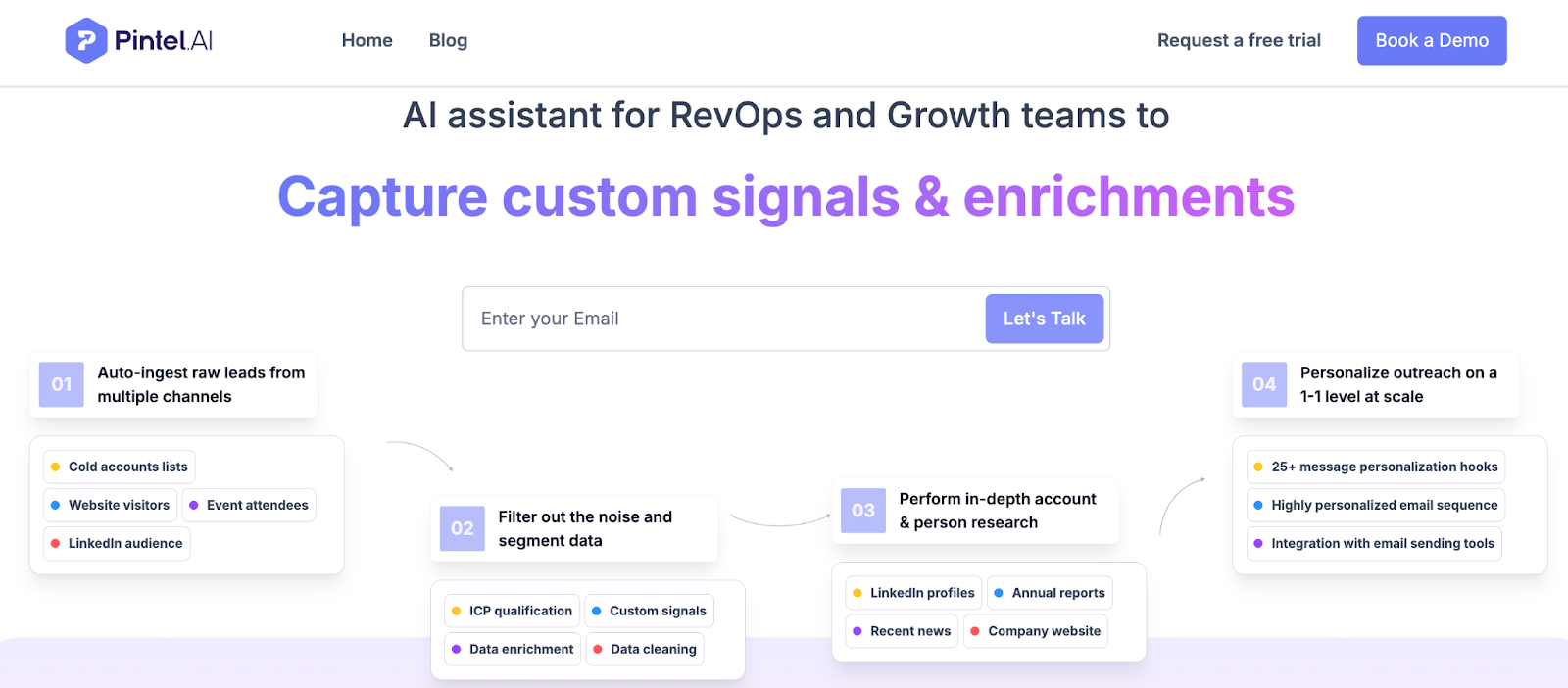

This comparison of Apollo, Clearbit, Clay, and Pintel.ai focuses on how these tools behave inside real outbound systems—not just the fields they append. For GTM leaders choosing a lead enrichment tool today, the real question is no longer “Who has the most data?” but “Whose data behaves predictably inside our workflows?”

Why Lead Enrichment Tools Often Break GTM Workflows

Lead enrichment tools used to be simple data appenders. As they became embedded into routing, scoring, and personalization logic, minor inconsistencies began introducing major operational friction.

Figure: Common Reasons Lead Enrichment Tools Break GTM Workflows

1. Instability in Titles, Functions, and Seniority

Many lead enrichment tools reinterpret titles during dataset refreshes. A “Head of Marketing” might become a “Marketing Lead,” shifting seniority, scoring, and routing logic. These variations accumulate and quietly destabilize GTM workflows.
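
To make the effect concrete, here is a minimal sketch of how a keyword-based seniority rule reacts to that kind of title rewrite. The rule list, level names, and `classify_seniority` function are hypothetical and exist only for illustration; real scoring models and vendor classifiers will differ.

```python
# Hypothetical keyword rules, checked in order; not any vendor's actual logic.
SENIORITY_RULES = [
    ("chief", "c_level"),
    ("vice president", "vp"),
    ("head of", "director"),
    ("director", "director"),
    ("lead", "manager"),
    ("manager", "manager"),
]


def classify_seniority(title: str) -> str:
    """Return the first seniority level whose keyword appears in the title."""
    normalized = title.lower()
    for keyword, level in SENIORITY_RULES:
        if keyword in normalized:
            return level
    return "individual_contributor"


# The same person, before and after a provider rewrites the title.
before = classify_seniority("Head of Marketing")  # "director"
after = classify_seniority("Marketing Lead")      # "manager"
print(before, "->", after)
```

Nothing about the contact changed, yet any routing or scoring rule keyed on seniority would now treat the record differently.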

2. Fragmentation Across Providers

Most teams enrich data across multiple sources—Apollo for sourcing, Clearbit for firmographics, Clay for workflows, and CRM plug-ins. Each provider uses different schemas, naming conventions, and classification logic. The result is segmentation drift, misaligned routing, and unpredictable scoring.

3. Over-Enrichment and Schema Noise

Some lead enrichment tools add too many fields or overly granular attributes. Others create new CRM fields automatically. This leads to CRM bloat, unclear field ownership, and brittle automation.

4. Unpredictable Refresh Cadence

Every provider updates its data at different intervals. These refresh cycles often introduce classification changes that break routing or scoring unexpectedly. Teams rarely notice the refresh—but they feel the downstream impact immediately.
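
One way to make refreshes visible is to diff the fields that routing and scoring depend on before a refresh is written back. The sketch below assumes two snapshots held as dictionaries keyed by a record ID; the field names and the `find_classification_drift` helper are illustrative assumptions, not part of any provider's API.

```python
# Fields that routing and scoring rules are assumed to depend on.
WATCHED_FIELDS = ("title", "function", "seniority")


def find_classification_drift(previous, current, watched=WATCHED_FIELDS):
    """Compare two snapshots keyed by record ID and list changed watched fields."""
    changes = []
    for record_id, old in previous.items():
        new = current.get(record_id)
        if new is None:
            continue  # record missing from the refresh; handle separately
        for field in watched:
            if old.get(field) != new.get(field):
                changes.append((record_id, field, old.get(field), new.get(field)))
    return changes


previous = {"c1": {"title": "Head of Marketing", "function": "marketing", "seniority": "director"}}
current = {"c1": {"title": "Marketing Lead", "function": "marketing", "seniority": "manager"}}

for record_id, field, old, new in find_classification_drift(previous, current):
    print(f"{record_id}: {field} changed from {old!r} to {new!r}")
```

A report like this lets RevOps review classification changes before they reach routing rules, rather than discovering them through misrouted leads.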

5. Lack of Schema Discipline Across Tools

When enrichment tools don’t understand or respect CRM schema structure, they produce conflicting fields, overwrite critical values, or create new attributes without governance. This gradually erodes segmentation logic, reporting accuracy, and overall data trust.
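
A simple guard in front of the CRM write can enforce this kind of discipline. The following is a sketch under stated assumptions: the allowlist, the field names, and the `apply_enrichment` function are hypothetical, and a real integration would use the CRM's own field metadata and permissions.

```python
# Hypothetical allowlist of CRM fields that enrichment is permitted to fill.
ALLOWED_FIELDS = {"title", "function", "seniority", "industry", "employee_count"}


def apply_enrichment(record, enrichment, allowed=ALLOWED_FIELDS):
    """Fill empty allowed fields only; report everything that was refused."""
    updated = dict(record)
    rejected = {}
    for field, value in enrichment.items():
        if field not in allowed:
            rejected[field] = "field not in schema"   # never auto-create fields
        elif updated.get(field) not in (None, ""):
            rejected[field] = "existing value kept"   # never overwrite silently
        else:
            updated[field] = value
    return updated, rejected


record = {"title": "VP Sales", "industry": ""}
enrichment = {"title": "Sales Lead", "industry": "Software", "tech_stack_v2": "unknown"}

updated, rejected = apply_enrichment(record, enrichment)
print(updated)   # {'title': 'VP Sales', 'industry': 'Software'}
print(rejected)  # {'title': 'existing value kept', 'tech_stack_v2': 'field not in schema'}
```

Returning the rejected fields, rather than dropping them silently, gives field owners a record of what each provider tried to change.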

Modern GTM teams aren’t struggling because enrichment lacks data—they struggle because enrichment lacks discipline.

What GTM Teams Expect From Lead Enrichment Tools

Teams now evaluate enrichment tools based on predictability, workflow alignment, and operational stability. They expect:

- stable title → function → seniority mapping

- consistent firmographics

- schema-safe enrichment

- predictable behavior across cycles

- complete profiles without extra research

- reduced RevOps maintenance

- routing- and scoring-compatible outputs

Below is a capability-level comparison of Apollo, Clearbit, Clay, and Pintel.ai, focusing on how their data behaves inside GTM workflows rather than how many fields they enrich.

| GTM Reality That Matters | Apollo | Clearbit | Clay | Pintel.ai |
|---|---|---|---|---|
| How qualification logic is applied | Title and firmographic filters defined per search | Predefined firmographic attributes | User-built logic via prompts and formulas | Embedded ICP agent applies standardized logic |
| What determines data accuracy | Database coverage and scrape freshness | Source authority at company level | Prompt quality + source selection | Task-specific agents with QA checks |
| Who carries the accuracy burden | SDR judgment | RevOps cleanup | RevOps / technical operator | System-level validation |
| What happens when data is incomplete | Gaps passed to SDRs | Person-level gaps common | User adds more sources or logic | Waterfall enrichment fills gaps sequentially |
| How errors are detected | Post-outreach or bounce reports | Downstream workflow failures | Manual row-by-row review | Confidence scoring flags exceptions |
| Operational effort to keep workflows stable | Ongoing manual review | Schema monitoring | Continuous prompt and pipeline maintenance | Minimal intervention after setup |
| Who the tool assumes as the user | Individual SDR | RevOps / Marketing Ops | Technical RevOps / Ops-heavy teams | Business users and RevOps |

Note: These comparisons are based on publicly available information, user reviews, and observed product behavior. For the most accurate and updated details, please refer to each vendor’s official website or book a product demo.

Apollo: Scale and Speed With Variability in Classification

Apollo is one of the most widely used lead enrichment tools because it combines sourcing, enrichment, and sequencing in one environment. It’s excellent for high-volume outbound motions.

However, Apollo’s large scraped database introduces classification variability. Titles, functions, and seniority can shift between enrichment cycles. Firmographics may be broad or inconsistent. Apollo is effective for discovery, but not always reliable for workflows requiring stable fields.

Apollo works best for teams that:

- run high-volume outbound

- rely on SDR judgment

- can tolerate inconsistent classifications

- prioritize speed over workflow precision

Pintel.ai: Stable Classifications and Complete Profiles With Predictable Behavior

Stable classifications across enrichment cycles

Unlike tools that reinterpret titles on every refresh, Pintel resolves title → function → seniority through a standardized interpretation layer. This keeps routing, scoring, and persona logic stable over time.

Predictable enrichment behavior inside GTM workflows

Pintel is designed around how enriched fields are used in CRMs and automation—not just appended. Outputs align cleanly with existing schemas, reducing routing drift and RevOps intervention.

Complete profiles even with limited public data

Where other tools degrade when LinkedIn or public data is sparse, Pintel fills gaps through contextual inference. This reduces manual SDR validation before outreach.

Waterfall enrichment to avoid schema conflicts

Rather than merging multiple sources at once, Pintel fills missing data sequentially. This avoids conflicting values and unnecessary CRM field expansion.
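
The general waterfall pattern can be sketched as follows. This is an illustration of the sequential-fill idea only, not Pintel's implementation; the source names, the lookup callables, and the `waterfall_enrich` function are all hypothetical.

```python
def waterfall_enrich(record, sources, required_fields):
    """Fill missing required fields from sources in priority order.

    `sources` is a list of (name, lookup) pairs, where `lookup` is any
    callable that takes the record and returns a dict of field values.
    A field filled by an earlier source is never overwritten by a later one.
    """
    result = dict(record)
    provenance = {}
    for name, lookup in sources:
        missing = [f for f in required_fields if result.get(f) in (None, "")]
        if not missing:
            break  # nothing left to fill, so later sources are never called
        data = lookup(record)
        for field in missing:
            value = data.get(field)
            if value not in (None, ""):
                result[field] = value
                provenance[field] = name
    return result, provenance


# Stand-in lookups; real ones would call provider APIs.
sources = [
    ("primary", lambda r: {"title": "CFO", "industry": None}),
    ("secondary", lambda r: {"title": "Finance Chief", "industry": "Fintech"}),
]

enriched, provenance = waterfall_enrich(
    {"email": "a@example.com"}, sources, ["title", "industry"]
)
print(enriched)    # {'email': 'a@example.com', 'title': 'CFO', 'industry': 'Fintech'}
print(provenance)  # {'title': 'primary', 'industry': 'secondary'}
```

Because each field has exactly one winning source, the conflicting title from the secondary source is never written, and no extra per-provider fields are needed to hold competing values.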

Research automation without Clay-level operational overhead

Pintel Agent automates persona identification and account context extraction, tasks typically built manually in Clay, without requiring custom prompts or ongoing workflow maintenance.

In practice, this means less manual review for SDRs, fewer schema fixes for RevOps, and enrichment data that behaves consistently across GTM systems.

Clearbit: Strong Firmographics With Limited Person-Level Depth

Clearbit excels at delivering clean, structured firmographic data. It’s widely used for segmentation, ICP filtering, scoring models, and ABM targeting because its company-level signals are consistent and stable over time.

Where Clearbit shows limitations is at the person level. Title normalization, function mapping, and seniority depth are intentionally lightweight. Refresh cycles focus more on company attributes than on role interpretation, which means routing and persona logic often still depend on SDR judgment or downstream tooling.

In practice, Clearbit strengthens who to target, but contributes less clarity on who exactly to message within the account.

Clearbit works best for teams that:

- rely heavily on firmographic precision

- operate scoring or segmentation-heavy GTM systems

- run targeted outbound or ABM motions

- require stable company-level data structures more than persona depth

Clay: Workflow Flexibility With Operational Complexity

Clay gives teams the ability to design highly customized enrichment workflows by chaining multiple data sources, transformations, and logic layers. For RevOps teams that want full control, this flexibility is a major advantage.

However, that flexibility shifts responsibility onto the operator. Data conflicts, schema mismatches, API changes, and prompt drift are not edge cases; they are expected maintenance work. As workflows grow, teams spend increasing time monitoring pipelines rather than improving GTM strategy.

In practice, Clay excels at building bespoke enrichment systems, but time to value and long-term stability depend heavily on technical RevOps capacity.

Clay works best for teams that:

- need multi-source enrichment across niche datasets

- have strong technical RevOps or Ops engineering support

- can maintain pipelines and logic over time

- prefer customization even if it increases operational overhead

When Each Lead Enrichment Tool Makes Sense

Each lead enrichment tool reflects a different philosophy.

- Choose Apollo for scale and high-volume outbound.

- Choose Pintel for stable contact-level classification with predictable firmographic and technographic data.

- Choose Clearbit for firmographic accuracy and segmentation.

- Choose Clay for customizable enrichment workflows.

Choosing a Lead Enrichment Tool Means Choosing a Data Philosophy

Lead enrichment decisions shape far more than data coverage. They shape how reliably a GTM system functions over time.

As enrichment becomes embedded in routing, scoring, segmentation, and personalization, small inconsistencies stop being tolerable. Titles that shift, classifications that drift, and schemas that sprawl do not just create noise. They compound into broken workflows, manual fixes, and eroding trust in data.

The real question for GTM leaders is no longer how much data a tool can append. It is whether enriched data behaves consistently enough to be depended on across automation, reporting, and decision-making. In modern GTM systems, stability is not a nice-to-have. It is the foundation that keeps everything else working.

Choosing a lead enrichment tool is ultimately choosing the kind of system you want to operate: one that constantly needs human correction, or one that remains reliable as scale increases.

FAQ: What GTM Leaders Ask About Lead Enrichment Tools

Why do lead enrichment tools create inconsistent routing outcomes?

Because each provider classifies titles, functions, industries, and seniority differently. When those fields shift between cycles, routing rules misfire.

Why do multi-source enrichment setups break more often?

Different sources use different schemas and definitions. Without strict normalization, conflicts accumulate, creating operational instability.

Which lead enrichment tool is most reliable for title → function → seniority mapping?

Pintel provides the most consistent classification across cycles. Apollo varies, Clearbit is basic, and Clay depends entirely on the sources used.

What happens when a prospect has no LinkedIn or a limited online presence?

Most enrichment tools degrade in accuracy. Pintel maintains profile completeness through contextual inference rather than relying solely on public data.

Do lead enrichment tools actually improve CRM data quality?

Only when they maintain schema discipline. Tools that over-enrich or create new fields automatically can degrade CRM data quality.

Why does enriched data drift over time, even with the same provider?

Model updates, dataset refreshes, and source changes cause reinterpretation of fields. This is normal for many enrichment engines, but problematic for automated workflows.

Should teams use multiple lead enrichment tools together?

It depends. Multi-source enrichment expands coverage but increases conflicts and RevOps workload. Single-source enrichment is more predictable.

What’s the most overlooked factor when choosing a lead enrichment tool?

Predictability. Most teams evaluate coverage, but the biggest workflow failures come from unstable classifications.

Can enrichment fully eliminate SDR research?

Not entirely. But tools with stable classifications and complete profiles reduce manual validation significantly.